Google introduced the seventh generation of its Tensor Processing Unit (TPU), Ironwood, last week. Unveiled at Google Cloud Next 25, it is said to be the company’s most powerful and scalable custom artificial intelligence (AI) accelerator. The Mountain View-based tech giant said the chipset was specifically designed for AI inference, the compute used by an AI model to process a query and generate a response. The company will soon make its Ironwood TPUs available to developers via the Google Cloud Platform.

Google Introduces Ironwood TPU for AI Inference

In a blog post, the tech giant introduced its seventh-generation AI accelerator chipset. Google stated that Ironwood TPUs will enable the company to move from a response-based AI system to a proactive AI system, which is focused on dense large language models (LLMs), mixture-of-experts (MoE) models, and agentic AI systems that “retrieve and generate data to collaboratively deliver insights and answers.”

Notably, TPUs are custom-built chipsets for AI and machine learning (ML) workflows. These accelerators offer extremely high parallel processing, especially for deep learning-related tasks, as well as significantly high power efficiency.

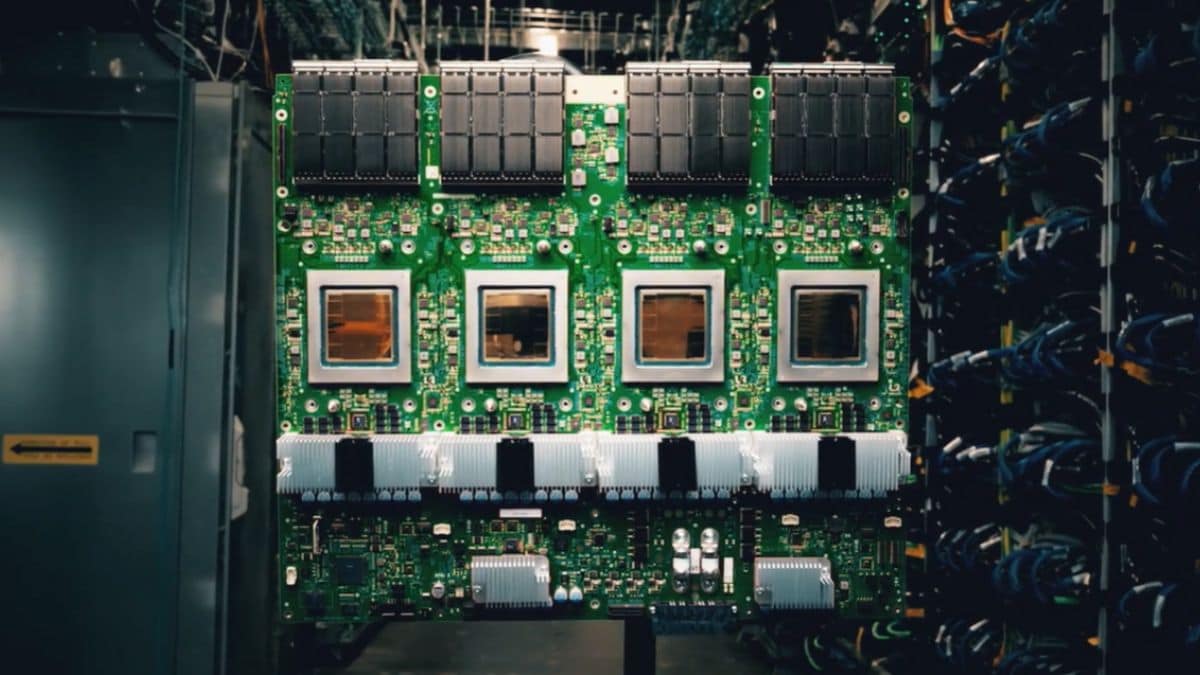

Google said each Ironwood chip comes with a peak compute of 4,614 teraflops (TFLOPs), which is considerably higher throughput compared to its predecessor, Trillium, which was unveiled in May 2024. The tech giant also plans to make these chipsets available as clusters to maximise the processing power for higher-end AI workflows.

Ironwood can be scaled up to a cluster of 9,216 liquid-cooled chips linked with an Inter-Chip Interconnect (ICI) network. The chipset is also one of the new components of the Google Cloud AI Hypercomputer architecture. Developers on Google Cloud can access Ironwood in two sizes: a 256-chip configuration and a 9,216-chip configuration.

In its most expansive cluster, Ironwood chipsets can generate up to 42.5 exaflops of computing power. Google claimed that this is 24x the compute of the world’s largest supercomputer, El Capitan, which offers 1.7 exaflops per pod. Ironwood TPUs also come with expanded memory, with each chipset offering 192GB, six times what Trillium was equipped with. The memory bandwidth has also been increased to 7.2TBps.
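The headline figures above can be cross-checked with some quick arithmetic. The following is a minimal Python sketch that uses only the numbers quoted in this article (per-chip peak compute, per-chip memory, the two cluster sizes, and the El Capitan figure). The function and constant names are illustrative, and the memory totals assume decimal gigabytes.

```python
# Back-of-the-envelope check of the cluster figures quoted in the article.
# All constants come from the article; names are illustrative only.

PEAK_TFLOPS_PER_CHIP = 4_614      # peak compute per Ironwood chip
HBM_GB_PER_CHIP = 192             # memory per Ironwood chip
EL_CAPITAN_EXAFLOPS = 1.7         # figure quoted for El Capitan
CLUSTER_SIZES = (256, 9_216)      # the two configurations on offer

TFLOPS_PER_EXAFLOP = 1_000_000    # 1 exaflop = 10^18 FLOPS = 10^6 teraflops


def cluster_exaflops(chips: int) -> float:
    """Aggregate peak compute of a cluster, in exaflops."""
    return chips * PEAK_TFLOPS_PER_CHIP / TFLOPS_PER_EXAFLOP


def cluster_memory_gb(chips: int) -> int:
    """Aggregate memory of a cluster, in gigabytes."""
    return chips * HBM_GB_PER_CHIP


if __name__ == "__main__":
    for chips in CLUSTER_SIZES:
        print(
            f"{chips:>5} chips: "
            f"{cluster_exaflops(chips):.2f} exaflops, "
            f"{cluster_memory_gb(chips):,} GB of memory"
        )

    ratio = cluster_exaflops(9_216) / EL_CAPITAN_EXAFLOPS
    print(f"Largest cluster vs El Capitan: {ratio:.1f}x")

    # "Six times Trillium" implies this much memory per Trillium chip.
    print(f"Implied Trillium memory: {HBM_GB_PER_CHIP / 6:.0f} GB per chip")
```

Multiplying 9,216 chips by 4,614 teraflops gives roughly 42.5 exaflops, which matches the quoted peak, and dividing by 1.7 gives a ratio of about 25, close to the 24x figure Google cited. These are peak numbers taken at face value and say nothing about sustained performance on a real workload.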

Notably, Ironwood is currently not available to Google Cloud developers. Just like the previous chipset, the tech giant will likely first transition its internal systems to the new TPUs, including the company’s Gemini models, before expanding its access to developers.