As Big Tech pours countless dollars and resources into AI, preaching the gospel of its utopia-creating brilliance, here's a reminder that algorithms can screw up. Big time. The latest evidence: You can trick Google's AI Overview (the automated answers at the top of your search queries) into explaining fictional, nonsensical idioms as if they were real.

According to Google's AI Overview (via @gregjenner on Bluesky), "You can't lick a badger twice" means you can't trick or deceive someone a second time after they've been tricked once.

That sounds like a logical attempt to explain the idiom, if only it weren't poppycock. Google's Gemini-powered failure came in assuming the question referred to an established phrase rather than absurd mumbo jumbo designed to trick it. In other words, AI hallucinations are still alive and well.

We plugged some silliness into it ourselves and found similar results.

Google's answer claimed that "You can't golf without a fish" is a riddle or play on words, suggesting you can't play golf without the necessary equipment, specifically, a golf ball. Amusingly, the AI Overview added the clause that the golf ball "might be seen as a 'fish' due to its shape." Hmm.

Then there's the age-old saying, "You can't open a peanut butter jar with two left feet." According to the AI Overview, this means you can't do something that requires skill or dexterity. Again, a noble stab at an assigned task without stepping back to fact-check the content's existence.

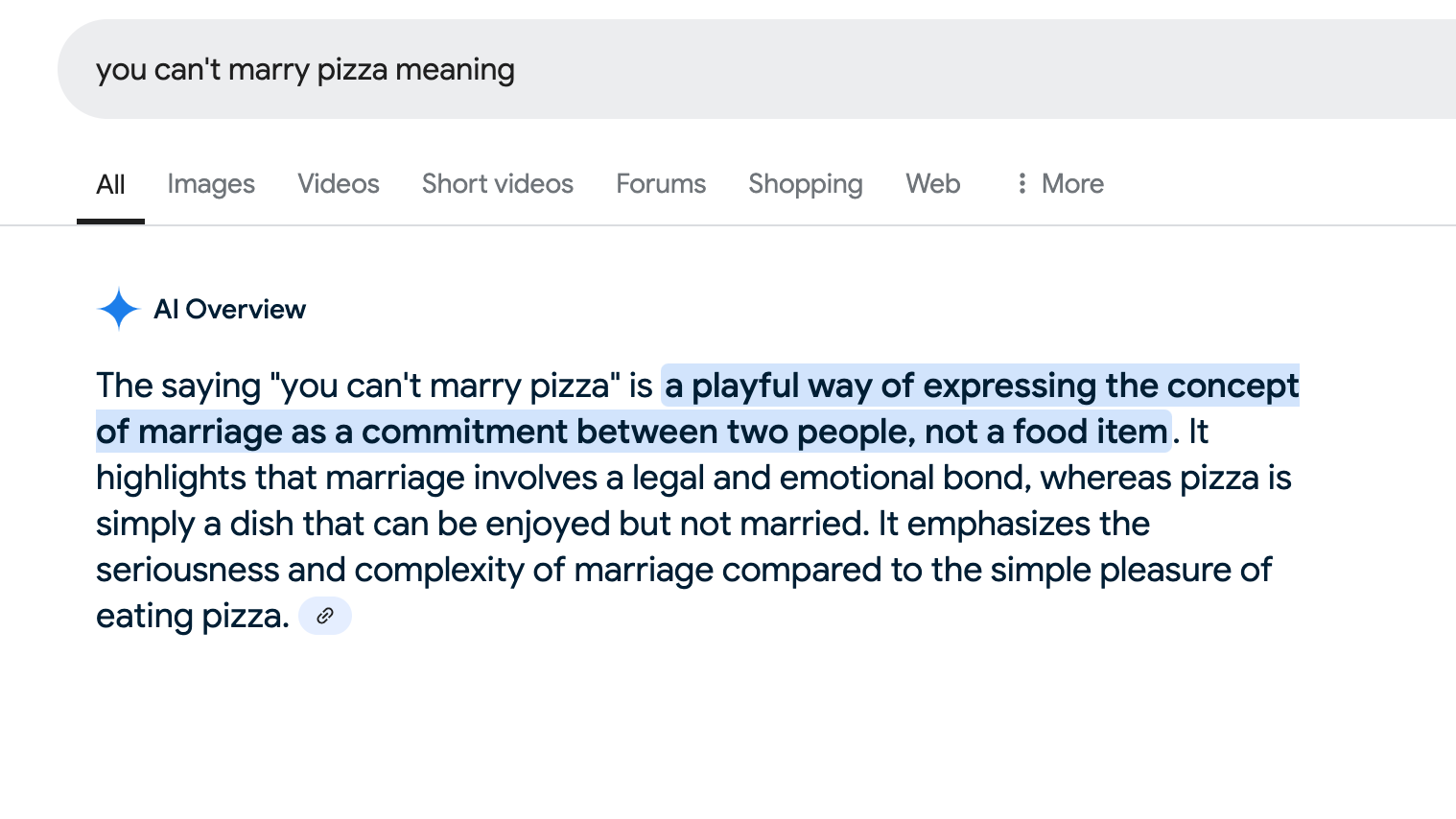

There's more. "You can't marry pizza" is a playful way of expressing the concept of marriage as a commitment between two people, not a food item. (Naturally.) "Rope won't pull a dead fish" means that something can't be achieved through force or effort alone; it requires a willingness to cooperate or a natural progression. (Of course!) "Eat the biggest chalupa first" is a playful way of suggesting […] (Sage advice.)

This is hardly the first example of AI hallucinations that, if not fact-checked by the user, could lead to misinformation or real-life consequences. Just ask the ChatGPT lawyers, Steven Schwartz and Peter LoDuca, who were fined $5,000 in 2023 for using ChatGPT to research a brief in a client's litigation. The AI chatbot generated nonexistent cases cited by the pair that the other side's attorneys (quite understandably) couldn't locate.

The pair's response to the judge's discipline? "We made a good faith mistake in failing to believe that a piece of technology could be making up cases out of whole cloth."

This article originally appeared on Engadget at https://www.engadget.com/ai/you-can-trick-googles-ai-overviews-into-explaining-made-up-idioms-162816472.html?src=rss